The Gist

Two years ago, I started a business that helps tech companies hire engineers through quantified hiring. Last Thursday, I shut down the main server that runs candidate submissions. And nobody screamed.

Update, Monday: someone screamed.

The Beginning

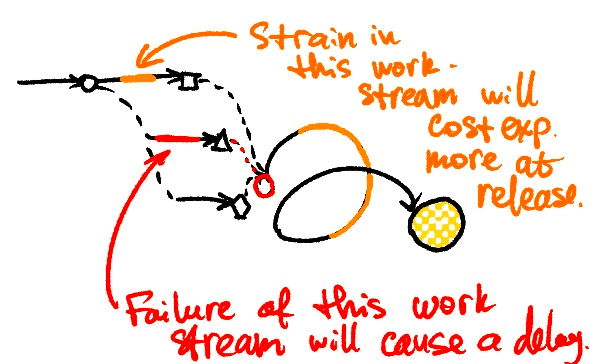

In 2017, the consultancy I co-founded, Serokell, had trouble finding good candidates, and we were resorting to headhunting. That put a strain on delivering our customers' projects: while we weren't failing, we had to put contingency plans in place to make sure we could still deliver if someone underperformed.

Hiring candidates out of small pools caused a strain on the delivery of projects.

But beyond the horizon there was a better problem we had yet to face! All the companies I start are mission-driven, and Serokell was no exception. Its mission was to make well-typed functional programming languages the default choice for new software projects, and we wanted to make this true for the whole software industry!

To achieve that, we started a compiler department tasked with contributing to the Glasgow Haskell Compiler. Unsurprisingly, we became quite popular!

During our first hiring round of 2018, we received hundreds of applications, but we didn't have a process for handling them. The problem switched from finding candidates to ranking them.

After we gained popularity, hiring became a problem of choosing the right candidate.

Armed with the legendary "hiring post", which is widely known in narrow circles, I decided not to hope for the best and instead figured out how to make a process like the one it describes work.

I made a test that used all the libraries and approaches we were using at Serokell. With this methodology, I was aiming even higher than a binary "fit / unfit" check: I wanted to rank candidates by their hard-skill performance level. To do that, I devised a problem that has an optimal solution whose polynomial running time is high enough that it cannot compute results on bigger data sets in reasonable time.

I have developed a test that ranks candidates by their hard skill performance level.
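To make the ranking idea concrete, here is a minimal Rust sketch of one way it could be computed, assuming each submission is run against data sets of increasing size and a run only counts if it produces a correct answer within the time limit. The `RunResult`, `Score`, `score` and `rank` names and the tie-breaking rule are hypothetical; the post does not describe the actual scoring.

```rust
use std::time::Duration;

/// Hypothetical result of running one submission on one data set.
#[derive(Debug, Clone)]
pub struct RunResult {
    pub input_size: usize,
    /// `None` means the run timed out or produced a wrong answer.
    pub elapsed: Option<Duration>,
}

/// Hypothetical score: the largest input solved within the limit,
/// plus the total time spent on solved inputs (lower is better).
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct Score {
    pub largest_solved: usize,
    pub total_time: Duration,
}

pub fn score(results: &[RunResult]) -> Score {
    let mut largest_solved: usize = 0;
    let mut total_time = Duration::ZERO;
    for r in results {
        if let Some(t) = r.elapsed {
            largest_solved = largest_solved.max(r.input_size);
            total_time += t;
        }
    }
    Score { largest_solved, total_time }
}

/// Ranks candidates best-first: larger solved inputs win, ties go to less total time.
pub fn rank(mut candidates: Vec<(String, Score)>) -> Vec<String> {
    candidates.sort_by(|(_, a), (_, b)| {
        b.largest_solved
            .cmp(&a.largest_solved)
            .then_with(|| a.total_time.cmp(&b.total_time))
    });
    candidates.into_iter().map(|(name, _)| name).collect()
}
```

Because the optimal algorithm cannot finish on the biggest inputs, a scheme like this separates candidates by how far their approach scales, rather than by a pass/fail bit.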

The test is untimed, and candidates can use any resources they want. To check for possible cheating, we had a checklist of technical interview questions that we asked the top performers.

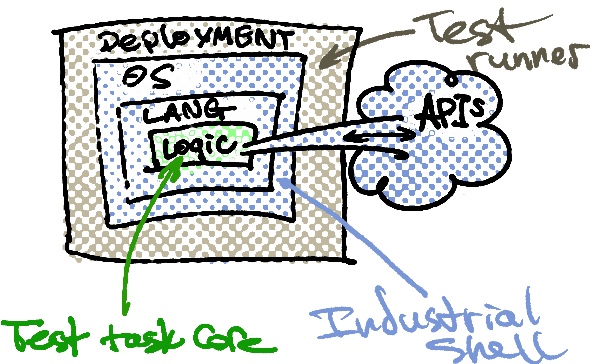

To avoid running the tests manually, I wrote a program that sandboxed and ran the tests automatically, and gave the HR department a UI to upload submissions and see the results.
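The post doesn't show the runner, but its core loop (start a submission, enforce a time limit, check the output) can be sketched with the Rust standard library alone. This is a hypothetical illustration: `run_and_check` and `Verdict` are invented names, and it is deliberately *not* a sandbox; real isolation (namespaces, seccomp, resource limits) would have to be layered on top.

```rust
use std::io::{self, Read};
use std::process::{Command, Stdio};
use std::thread;
use std::time::{Duration, Instant};

#[derive(Debug, PartialEq)]
pub enum Verdict {
    Accepted,
    WrongAnswer,
    TimedOut,
    /// The process exited with a non-zero status.
    Crashed,
}

/// Runs `program` with `args`, kills it after `limit`, and compares its
/// trimmed stdout with `expected`. NOT a sandbox: no isolation is applied.
pub fn run_and_check(program: &str, args: &[&str], limit: Duration, expected: &str) -> io::Result<Verdict> {
    let mut child = Command::new(program)
        .args(args)
        .stdin(Stdio::null())
        .stdout(Stdio::piped())
        .stderr(Stdio::null())
        .spawn()?;

    // Drain stdout on another thread so a chatty program can't block on a full pipe.
    let mut stdout = child.stdout.take().expect("stdout is piped");
    let reader = thread::spawn(move || {
        let mut buf = String::new();
        let _ = stdout.read_to_string(&mut buf);
        buf
    });

    // Poll until the process exits or the deadline passes.
    let deadline = Instant::now() + limit;
    let status = loop {
        if let Some(status) = child.try_wait()? {
            break Some(status);
        }
        if Instant::now() >= deadline {
            child.kill()?;
            child.wait()?; // reap the killed process
            break None;
        }
        thread::sleep(Duration::from_millis(10));
    };
    let output = reader.join().unwrap_or_default();

    Ok(match status {
        None => Verdict::TimedOut,
        Some(s) if !s.success() => Verdict::Crashed,
        Some(_) if output.trim() == expected.trim() => Verdict::Accepted,
        Some(_) => Verdict::WrongAnswer,
    })
}
```

On a Unix machine, `run_and_check("echo", &["42"], Duration::from_secs(5), "42")` yields `Verdict::Accepted`, while `run_and_check("sleep", &["5"], Duration::from_millis(200), "")` is killed and yields `Verdict::TimedOut`.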

What Went Wrong?

The downfall of my startup was that nothing went wrong. Since 2018, we have been using this methodology to hire people, and we haven't hired a single person we were unhappy with. What is more, there was only one case where we hired someone at the "wrong seniority": the person overperformed in the test but lacked the teamwork experience required of a senior developer.

The approach solved my problem, so I assumed it would solve it for everyone else.

Stellar success blinded me into believing that I would be able to convince companies this methodology was worth paying for.

Turning Methodology Into A Product

I knew from first-hand experience that many companies fail to deliver projects because of bad hiring decisions. My joking scare-tactic sales pitch is only half a joke.

So when I reduced my involvement at Serokell, I figured I should make a product out of this methodology. I prototyped a bit, then hired two engineers and a designer and started working on an automation tool.

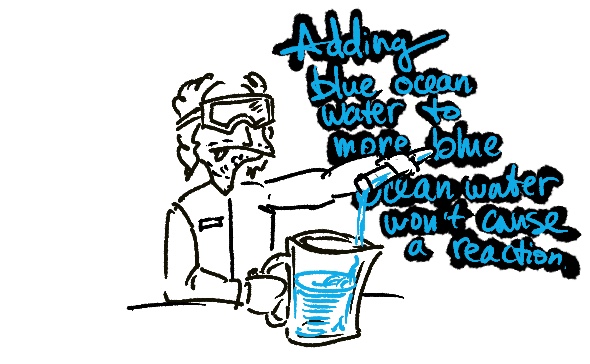

My first hypothesis was that the only way to sell this methodology to companies was to make running the tests as fast as possible. My second hypothesis was that, since our methodology was a blue ocean, we could amplify that advantage by serving a niche where even tech skill screening is impossible, i.e. where HackerRank and LeetCode simply don't support the technology of interest. Under that hypothesis, I figured I should try selling in that niche.

This is why, when I found what felt like a good prospect who had repeatedly failed to deliver a Unity3D project, and confirmed that Unity3D hiring is a blue ocean squared, I decided to add it to the MVP tally.

I decided to amplify the novelty by serving a tiny niche without competitors.

Finally, I knew that the part of the methodology that revolves around inserting deliberate deficiencies into test task skeletons had put me onto developing an ontology and taxonomy of soft technical skills. When I tested the interest at networking events, I found that HR people were eager to learn about the diligence and capacity for nuanced understanding of the candidates they hire. So I added soft skill evaluation to the MVP tally as well.

I made three completely new test tasks:

- Single-player test task for Rust and Scala (my niche: I know Rust developers, so I thought it would be easy to sell to them).

- Multiplayer Elixir test task (the product's MVP was written in Elixir, and the problem domain was low-latency multicore programming and soft real-time systems).

- Unity3D test task (the blue ocean squared: people working with Unity3D told me that they hire by using universities with blessed courses as talent farms).

I implemented an automatic test runner that sandboxed submissions reasonably well, but I didn't obsess over every edge case. In fact, I still have a backlog of work required to reach the degree of sandboxing I'd be happy with under our threat model.

My employees implemented a CQRS/ES evaluation queue in Elixir (without properly using a static analyser) and a UI in React.
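For readers unfamiliar with CQRS/ES (Command Query Responsibility Segregation with Event Sourcing), here is a minimal Rust sketch of the idea applied to an evaluation queue: state is never updated in place, commands only append events, and the current status of each submission is a projection folded from the log. The event names, statuses and `EvaluationQueue` type are hypothetical; this is not a translation of the Elixir code.

```rust
use std::collections::HashMap;

/// Hypothetical events; the real queue's event schema isn't described in the post.
#[derive(Debug, Clone, PartialEq)]
pub enum Event {
    Submitted { id: u64 },
    EvaluationStarted { id: u64 },
    EvaluationFinished { id: u64, score: u32 },
}

#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Status {
    Queued,
    Running,
    Done(u32),
}

/// Query side: fold the event log into the current status of every submission.
pub fn project(events: &[Event]) -> HashMap<u64, Status> {
    let mut state = HashMap::new();
    for event in events {
        let (id, status) = match *event {
            Event::Submitted { id } => (id, Status::Queued),
            Event::EvaluationStarted { id } => (id, Status::Running),
            Event::EvaluationFinished { id, score } => (id, Status::Done(score)),
        };
        state.insert(id, status);
    }
    state
}

/// Command side: commands are validated against the projection, then recorded as events.
#[derive(Default)]
pub struct EvaluationQueue {
    events: Vec<Event>,
}

impl EvaluationQueue {
    pub fn events(&self) -> &[Event] {
        &self.events
    }

    pub fn submit(&mut self, id: u64) -> Result<(), String> {
        self.transition(id, None, Event::Submitted { id })
    }

    pub fn start(&mut self, id: u64) -> Result<(), String> {
        self.transition(id, Some(Status::Queued), Event::EvaluationStarted { id })
    }

    pub fn finish(&mut self, id: u64, score: u32) -> Result<(), String> {
        self.transition(id, Some(Status::Running), Event::EvaluationFinished { id, score })
    }

    /// Appends `event` only if submission `id` is currently in status `expected`
    /// (`None` = not seen yet). Rebuilding the projection per command is O(n),
    /// which is fine for a sketch but not for production.
    fn transition(&mut self, id: u64, expected: Option<Status>, event: Event) -> Result<(), String> {
        let current = project(&self.events).get(&id).copied();
        if current != expected {
            return Err(format!("submission {id}: expected {expected:?}, found {current:?}"));
        }
        self.events.push(event);
        Ok(())
    }
}
```

The appeal is a full audit trail of every submission; the cost is that every state transition rule lives in code like `transition`, which is exactly where an untyped codebase can quietly drift.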

I made a lot of test tasks before I sold a single one.

It took us a year to get to the point where we had a working MVP.

Part of the reason was that my employees and I were taking on contract work while developing the product.

Another reason was that, while working on the technical side, I was also making bursts of (admittedly unsustained) sales effort.

On the technical side of things, the reason for that was scoping. Consider the moving parts of the MVP:

- Build timeouts.

- Evaluation timeouts.

- Output checking.

- Threat modelling and sandboxing.

- Integration of runner with the queue.

- Fault tolerance of the queue.

- System resource limits and automatic cleanups.

- Statistical modelling of submission result accuracy.

- Ensuring the statistical significance of the results (see "Benchmarking: You're Doing It Wrong" by Aysylu Greenberg, and its slides).

- Implementing a free-for-all multiplayer runner for the Elixir test task.

- Implementing a Swiss tournament runner for the Unity3D test task (which, thankfully, I managed to design and delegate to an employee).
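As an illustration of the Swiss tournament item, here is a minimal Rust sketch of a greedy pairing for one round: sort players by score, pair each player with the closest-ranked opponent they haven't met, and give a bye to whoever is left over. This is a simplified stand-in, not the runner we built; official Swiss pairing rules (for example in chess) add backtracking and other constraints.

```rust
use std::collections::HashSet;

fn have_met(played: &HashSet<(usize, usize)>, a: usize, b: usize) -> bool {
    played.contains(&(a, b)) || played.contains(&(b, a))
}

/// Greedy Swiss pairing for one round (hypothetical simplification).
/// `scores[i]` is player i's current score; `played` holds pairs that already met.
/// Returns the pairs and, for an odd number of players, the one who gets a bye.
pub fn swiss_round(scores: &[u32], played: &HashSet<(usize, usize)>) -> (Vec<(usize, usize)>, Option<usize>) {
    // Highest score first; ties broken by index so the result is deterministic.
    let mut order: Vec<usize> = (0..scores.len()).collect();
    order.sort_by(|&a, &b| scores[b].cmp(&scores[a]).then(a.cmp(&b)));

    let mut paired = vec![false; scores.len()];
    let mut pairs = Vec::new();
    for (pos, &p) in order.iter().enumerate() {
        if paired[p] {
            continue;
        }
        let candidates: Vec<usize> = order[pos + 1..].iter().copied().filter(|&q| !paired[q]).collect();
        // Prefer a fresh opponent; fall back to a rematch rather than leaving p out.
        let opponent = candidates
            .iter()
            .copied()
            .find(|&q| !have_met(played, p, q))
            .or_else(|| candidates.first().copied());
        if let Some(q) = opponent {
            paired[p] = true;
            paired[q] = true;
            pairs.push((p, q));
        }
    }
    let bye = (0..scores.len()).find(|&i| !paired[i]);
    (pairs, bye)
}
```

With scores `[3, 1, 2, 0]` and players 0 and 2 having already met, player 0 skips player 2 and is paired with player 1, leaving `(2, 3)` as the second pair.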

Making a system which benchmarks other people's code is hard.
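One reason it is hard: a single timing is noise. A minimal Rust sketch of the usual remedy, in the spirit of the Greenberg talk cited above, is to warm up, repeat the measurement, and report a mean with its spread instead of one number. The `measure` and `Summary` names are hypothetical, and the threshold on `relative_spread` that would mark a result as unstable is left to the caller.

```rust
use std::time::Instant;

/// Summary statistics over repeated runs, in seconds.
#[derive(Debug)]
pub struct Summary {
    pub mean: f64,
    /// Sample standard deviation (n - 1 in the denominator).
    pub std_dev: f64,
    pub runs: usize,
}

impl Summary {
    /// Needs at least two samples to estimate spread.
    pub fn from_samples(samples: &[f64]) -> Option<Summary> {
        let n = samples.len();
        if n < 2 {
            return None;
        }
        let mean = samples.iter().sum::<f64>() / n as f64;
        let variance = samples.iter().map(|x| (x - mean).powi(2)).sum::<f64>() / (n - 1) as f64;
        Some(Summary { mean, std_dev: variance.sqrt(), runs: n })
    }

    /// Coefficient of variation: std_dev relative to the mean (undefined if mean is 0).
    pub fn relative_spread(&self) -> f64 {
        self.std_dev / self.mean
    }
}

/// Calls `f` `warmup` times untimed, then times `runs` calls.
pub fn measure<F: FnMut()>(mut f: F, warmup: usize, runs: usize) -> Option<Summary> {
    for _ in 0..warmup {
        f();
    }
    let samples: Vec<f64> = (0..runs)
        .map(|_| {
            let start = Instant::now();
            f();
            start.elapsed().as_secs_f64()
        })
        .collect();
    Summary::from_samples(&samples)
}
```

Ranking two submissions is only meaningful when the gap between their means is large compared to their spreads; otherwise the benchmark needs more runs or a quieter machine.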

Remember that Unity3D prospect I talked about? While working on the technical side, we got close to onboarding them as a paying customer! It didn't pan out; see "What Went Wrong?" below.

Thankfully (or sadly), it wasn't all doom and gloom: one of the companies that contracted us for a project agreed to try out our tool. The trial run was a huge success too: the person hired exceeded the company's expectations.

What Went Wrong?

The main problem, of course, was the failure to run the company as a product business. Even though I had read Jaime Levy's "UX Strategy", which is low-key more important than "The Lean Startup", I failed to follow the user persona procedures and conduct user interviews before committing so much time and so much of my own money to building the system.

I also failed to reduce the scope of the MVP. The brilliance of the offering was the novel methodology, not the system that runs it. As you will see in "The First Customer" section, it was possible to sell the methodology without complete automation.

In terms of scoping, an astute reader might have noticed that the most mainstream technology we offer test tasks for is Rust, which is not that mainstream! I became so obsessed with selling to niches that I forgot the most important principle of evaluating startup ideas: sell to the largest market possible. Niche considerations come as a second optimisation step.

Don't let niche considerations limit the market you address off the bat.

Finally, I failed to correctly assess the technical risks of using an untyped language to build a system of this complexity. Or, to be completely fair, I failed to instill a culture of writing maintainable code. The key technical failure was that I mandated CQRS/ES (an architecture I hadn't explored in Elixir specifically), and so I had no practical way to ensure that the code was maintainable.

In terms of tactics, with the Unity3D prospect I failed to focus on convincing the company that our methodology and our particular test task would actually bridge the gap between where they were and where they wanted to be. My downfall came when their CTO asked how our test task implements game lobby logic. I told them it's a simple private lobby that starts the game when enough clients are connected, meaning we didn't use any fancy lobby libraries and just rolled our own trivial implementation. When the CTO said they wanted this customised, I went along with it instead of politely explaining that, while a test task that completely resembles their setup would be great, it wouldn't test skills any better than our test task as-is.
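For a sense of how little the lobby logic in question involves, here is a minimal Rust sketch of a private lobby that starts the game once enough players have joined. It is a hypothetical illustration (the `Lobby` and `JoinOutcome` names and the invite-code check are assumptions), not the Unity3D test task's code.

```rust
#[derive(Debug, PartialEq)]
pub enum JoinOutcome {
    Waiting { have: usize, need: usize },
    GameStarted(Vec<String>),
    Rejected(&'static str),
}

/// Hypothetical private lobby: joining requires the invite code, and the game
/// starts as soon as `required` distinct players are in.
pub struct Lobby {
    invite_code: String,
    required: usize,
    players: Vec<String>,
    started: bool,
}

impl Lobby {
    pub fn new(invite_code: &str, required: usize) -> Self {
        Lobby { invite_code: invite_code.to_string(), required, players: Vec::new(), started: false }
    }

    pub fn join(&mut self, invite_code: &str, player: &str) -> JoinOutcome {
        if self.started {
            return JoinOutcome::Rejected("game already started");
        }
        if invite_code != self.invite_code {
            return JoinOutcome::Rejected("wrong invite code");
        }
        if self.players.iter().any(|p| p.as_str() == player) {
            return JoinOutcome::Rejected("already joined");
        }
        self.players.push(player.to_string());
        if self.players.len() >= self.required {
            self.started = true;
            JoinOutcome::GameStarted(self.players.clone())
        } else {
            JoinOutcome::Waiting { have: self.players.len(), need: self.required }
        }
    }
}
```

A candidate's skill shows in the game logic built on top of a lobby like this, not in the lobby itself, which is the argument I should have made to the CTO.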

I became the customer's servant, instead of their guide.

Even worse, the managing director, who is non-technical, had shown interest in the task, but instead of writing the offer in their language, I made an offer that used technical language. I should have given them two simple, easy-to-understand options. Instead, I created a modular offer where they could pick and choose the parts they wanted. You can see the offer I sent, but be warned: it's very cringe.

The First Customer

As an add-on to a contract we were doing for Yatima (which became Lurk Lab, and is now Argumentcomputer), we offered to help them with hiring.

We weren't doing anything related to sourcing, but we offered to run their candidates through our test tasks.

At that point, we didn't have the "MVP" ready, but we already had the Rust test task implemented and checkable.

We ran their candidates through the test task, and they were happy with the results. This felt amazing, because the reason I do things is to enable people to do things they couldn't do before. The fact that my methodology not only allowed them to hire the best candidate but also prevented them from hiring the wrong one (I've seen the submissions, and I am sure the person they hired was the best one) was a huge win for me.

Here I can pat myself on the back for the way I collected feedback, which allowed me to write a banging, deep and honest case study. But there was one aspect of it I was in denial about, and it ended up biting me in the ass.

You can read the testimonials from the business owner and the candidate, but here are the questions I think are great to ask.

Eleven Must-Ask Questions While Building Testimonials

- What is our product doing?

- If our product wasn't a thing, what would you do?

- Who do you feel our competitors are?

- Some people compare us to XYZ. How do you feel about that comparison? What are the differences?

- What would you say was the main obstacle that could prevent you from using our offer?

- What did you find as a result of buying our product?

- Name the single most important feature of the service, the one you liked the most.

- List three particular benefits of using the service vs not doing anything.

- What about vs using a competitor?

- How would you recommend our service to other companies?

- What would you like to add?

Now pay close attention to the way the customer answered the question about recommending us to other companies:

Let me say something critical: your tool works well only for IT companies that are already doing the right thing. Teams and companies that are already inclined to have good practices and are doing the right thing. However, those whom I’d consider braindead... It's not gonna be of much benefit to them. If you're not capable of understanding the signal, then the signal is useless to you.

This is...

What Went Wrong!

The customer said that our product is only useful for companies that are already doing the right thing. It's a very nice thing to hear for a researcher, but it's a death sentence for a product.

My biggest failure here was that I didn't process this feedback correctly. I failed to understand that what John was essentially saying was that our methodology and tool were not a product: they didn't have a niche large enough to get us to the magic number of 10 recurring customers we needed to be profitable.

Another problem was that I was selling to the wrong audience in the first place. I did some unit economics at the start, but it was daydreaming, not self-critical analysis.

What I failed to understand is that selling against the status quo to software companies means excruciatingly punching through the thick wall of "we have always done it this way". Which is fine and great! That's the price you pay for innovation. However, companies only hire several times a year, so the expected value (EV) of each sale attempt is roughly two euros per year.

Let that sink in. If we close one sale in a thousand attempts, the expected value of each approach is two euros per year.
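Written out, the expected-value arithmetic looks like this, where $p$ is the chance that one approach turns into a sale and $R$ is the annual revenue one converted customer brings in. The post gives only $p$ and the EV; the value of $R$ below is merely what those two figures imply, not a quoted price.

```latex
\mathrm{EV}_{\text{attempt}} = p \cdot R,
\qquad p = \frac{1}{1000},
\qquad \mathrm{EV}_{\text{attempt}} \approx 2\ \text{EUR/year}
\;\Longrightarrow\;
R \approx 1000 \cdot 2 = 2000\ \text{EUR/year}
```

Since a company that hires only several times a year caps $R$, even a much better conversion rate $p$ barely moves the EV; the lever that matters is selling to customers whose $R$ is large.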

I didn't listen to customer feedback properly, but, more devastatingly, I didn't do the math.

What should have been obvious to me very early on is that, instead of calculating my own unit economics, I should have calculated the unit economics of the companies I was selling to.

Which means that, in IT hiring, the only kind of company I should have sold to is... a recruitment agency. To my embarrassment, I only realised that by accident.

Grasping at Straws in the Burning Hindenburg

A year ago, I was selling at a conference, trying to network with companies that hire a lot of Rusters. I speak their language, so it was easy for me to explain what my tool does.

But then I approached a stand that turned out to belong to a recruitment agency. I didn't think much of it; remember, I didn't know I was selling to them. So I started the conversation by saying that they were not my target audience.

Claiming that some company is not your target audience wasn't a dirty sales tactic for me, although I think it can be one!

We just talked about what they do and what we do. Of course, I have never hidden the secret sauce. In fact, while our public demo deployment was up, anyone could download not only the test tasks but also the test task boilerplate, which was highly praised by the developers who had to complete the test tasks. So I explained the methodology to them and said "you seem cool and focused, let's see if we can work together".

After they said "okay", we had a couple of meetings, and we're actually still talking to them to this day. We also have some other prospects in the pipeline; together, these two facts are why this blog post is a pre-mortem, not a post-mortem.

So when I told my startup's co-founder about this, I low-key felt like Michael Scott getting the Salesperson of the Year award.

Our latest chat with them was about running a campaign for 50,000 developers. And this is where we are now, with the Elixir backend reaching out from the grave and snapping our neck.

You see, the issue is that we can't actually sustain a high load, even with sharding. Our MVP works perfectly fine for a single hiring campaign where submissions are sparse.

However, on the day we got the offer to do the huge run, I set up stress-testing infrastructure and realised that we fail at 20 submissions in the queue. The system doesn't crash, but it gets stuck and requires a restart.
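A rough Rust sketch of a stress test that catches this kind of stall: push `n` jobs into a queue served by a worker pool, then wait for completions, treating "no completion within `stall_timeout`" as stuck. The std-only worker pool and the `process` closure are stand-ins for the real backend (a hypothetical harness, not our actual stress-testing setup); against a live system, `process` would submit to the real queue and wait for the verdict.

```rust
use std::sync::mpsc;
use std::sync::{Arc, Mutex};
use std::thread;
use std::time::{Duration, Instant};

/// Pushes `n` jobs through `workers` threads running `process`.
/// Ok(total time) if all complete; Err(completed count) if nothing completes
/// within `stall_timeout`, i.e. the queue looks stuck. Stuck workers are leaked.
pub fn stress_test<F>(n: usize, workers: usize, stall_timeout: Duration, process: F) -> Result<Duration, usize>
where
    F: Fn(usize) + Send + Sync + 'static,
{
    let (job_tx, job_rx) = mpsc::channel::<usize>();
    let job_rx = Arc::new(Mutex::new(job_rx));
    let (done_tx, done_rx) = mpsc::channel::<usize>();
    let process = Arc::new(process);

    for _ in 0..workers {
        let job_rx = Arc::clone(&job_rx);
        let done_tx = done_tx.clone();
        let process = Arc::clone(&process);
        thread::spawn(move || loop {
            // The lock is held only while taking the next job off the queue.
            let job = match job_rx.lock().unwrap().recv() {
                Ok(job) => job,
                Err(_) => break, // queue closed and drained
            };
            (*process)(job);
            if done_tx.send(job).is_err() {
                break; // the harness stopped listening
            }
        });
    }
    drop(done_tx);

    let start = Instant::now();
    for job in 0..n {
        job_tx.send(job).expect("receiver is kept alive by this function");
    }
    drop(job_tx);

    let mut completed = 0;
    while completed < n {
        match done_rx.recv_timeout(stall_timeout) {
            Ok(_) => completed += 1,
            Err(_) => return Err(completed),
        }
    }
    Ok(start.elapsed())
}
```

Ramping `n` up run by run (10, 20, 50, ...) and recording where `Err` first appears gives the kind of "fails at 20 submissions" number described above.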

By then, our Elixir engineer had left the company, and while it was theoretically possible to spend a couple of weeks reworking the system, I realised it was time for the final mistake. A Rust rewrite!

Surprisingly, I managed to complete the rewrite in a month. While the frontend engineer was upset that I accidentally a whole API (is this dangerous?), we are just a few page components away from feature parity with the old system.

Plus, now we have Prometheus metrics, which we didn't have before, and Grafana dashboards, which we also didn't have before!
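For anyone who hasn't seen what Prometheus actually scrapes, here is a hand-rolled Rust sketch that renders two counters in the Prometheus text exposition format. The metric names are hypothetical, and a real service would normally use an existing client library rather than formatting the text by hand; this only shows the shape of the data Grafana ends up charting.

```rust
use std::fmt::Write;
use std::sync::atomic::{AtomicU64, Ordering};

/// Hypothetical counters for the submission pipeline.
#[derive(Default)]
pub struct Metrics {
    pub submissions_total: AtomicU64,
    pub evaluations_failed_total: AtomicU64,
}

impl Metrics {
    /// Renders every counter as `# HELP`, `# TYPE` and sample lines.
    pub fn render(&self) -> String {
        let mut out = String::new();
        let counters = [
            ("submissions_total", "Submissions received.", &self.submissions_total),
            ("evaluations_failed_total", "Evaluations that failed or timed out.", &self.evaluations_failed_total),
        ];
        for (name, help, counter) in counters {
            // Writing into a String cannot fail, so unwrap is safe here.
            writeln!(out, "# HELP {name} {help}").unwrap();
            writeln!(out, "# TYPE {name} counter").unwrap();
            writeln!(out, "{name} {}", counter.load(Ordering::Relaxed)).unwrap();
        }
        out
    }
}
```

Served from an HTTP endpoint that Prometheus scrapes, a counter like `evaluations_failed_total` is what would have shown the old queue stalling long before a stress test did.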

What Went Wrong?

For three quarters of my startup's existence, I kept developing more features, like generated interview checklists, while trying to sell to technical departments.

The reason I kept at it was that I kept talking to techies, collecting feedback and iterating. But I was iterating on the wrong thing.

Only after chatting with the recruitment agency did I realise that the only thing I should have been focusing on was running developer contests myself and allowing recruitment agencies to do the same.

What Now?

I know I've been focusing mostly on the negatives in my narration, but it actually wasn't all bad. I have learned a lot about sales and product development. I have realised that I can be an extremely productive developer: it's the first time in my personal history that I've kept my rolling annual GitHub contribution count above 1,000. I figured out an extremely efficient architecture for startup prototyping using Rust's axum and sqlx, which I have already applied to a prototype of an SEO product I'm passively working on with a friend. There, we managed to build a landing page and a core functionality demo in just one day!

So currently, I'm applying for remote Rust jobs or consulting gigs (if you have any ideas, send them my way!).

But there are some open questions about what to do with the codebase. I'm leaning towards seeing the prospects currently in my pipeline through and, if all else fails, open-sourcing it.